Jordan Bates • 18 min read

5 Unexpected Reasons the Internet is Making People Dumber

The Internet once had enormous potential as a tool for education. Hell, it still does.

But somewhere along the path to the present moment, something went horribly wrong.

Instead of exposing people to new, challenging ideas and different ways of thinking, the Internet in 2017 mostly just convinces people that what they already believe is The Truth.

The Internet could have been anything, but we made it into the most effective Bias Confirmation Machine in history.

“Absurd! Balderdash! Poppycock! How could this possibly be the case?!”

Let’s find out.

From where I’m standing, there seem to be five major reasons the Internet has become a gigantic, elaborate machine that prevents genuine learning and inquiry.

1. Search engines help you find what you want to find, not conflicting perspectives

It is in the nature of search engines to give people what they want.

If you possess a particular belief and want to find evidence for your position, Google will supply you with plenty of links to smart-sounding, seemingly credible evidence supporting your belief.

“But that means I’m right, doesn’t it?”

Well, no. The kicker is that it doesn’t matter what your belief is.

In psychological jargon, confirmation bias is the human tendency to interpret, filter, and seek out new information in ways that confirm your preexisting beliefs.

Keep this concept in mind, because it’s central to this discussion. It might sound crazy, but the Internet has basically become confirmation bias’ best friend.

The Internet is now so saturated with articles and information about everything that you can find credible-sounding people defending virtually any belief imaginable. All you have to do is punch a few buttons, and Google will take you straight to confident people passionately confirming exactly what you believe.

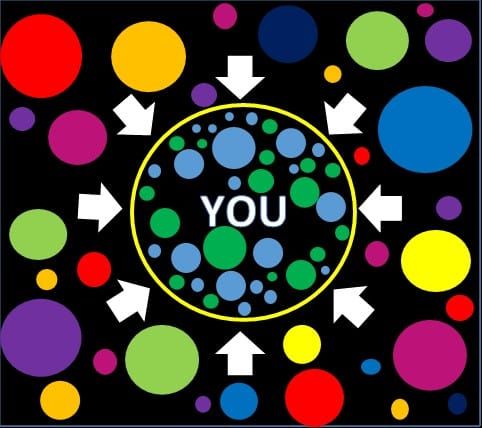

Here’s a simple illustration of this point:

Note that this effect is amplified substantially because of another fact that you may not be aware of: Google uses your browsing history and your friends’ recommendations/’likes’ to show you results it thinks you will like.

Imagine what happens when, say, a politically conservative person with mostly conservative friends who has previously clicked on many right-leaning links searches for anything politics-related on Google. What kinds of links and opinions do you think they’re presented with?

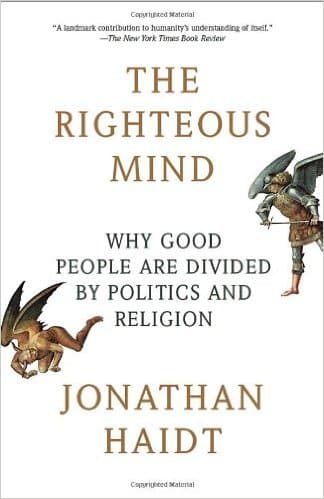

For many topics or issues, you can actually find scientific papers supporting whatever position you wish to take. Even though 97% of climate scientists agree that human-caused climate change is a real thing, climate skeptics will find no shortage of scientific papers contesting the consensus. This situation prompted Jonathan Haidt, in his book The Righteous Mind, to write, “Science is a smorgasbord, and Google will guide you to the study that’s right for you.”

Even the most absurd beliefs—e.g. the belief that Hillary Clinton is a reptilian humanoid—are passionately defended in certain corners of the Internet. You probably won’t find any remotely reputable sources defending such beliefs, but you will find extremely confident people doing so. And for many members of our rather credulous species, an extremely confident person who can string together a few sentences is sufficient reason to (continue to) believe something.

2. Social media feeds show you what you want to see, not what you need to see to become smarter

You may still be under the impression that your Facebook, Instagram, and Twitter feeds are showing you a live stream of everything posted by your friends and by pages you follow.

Think again.

Several years ago, Facebook rolled out the beta version of its newsfeed filtering algorithm. Eventually, most other major online social networks followed suit.

“Wait, what the hell is a newsfeed filtering algorithm?”

A newsfeed filtering algorithm is basically a computer program that decides which content is going to appear on your newsfeed.

These algorithms probably consider a multitude of factors, but as a rule of thumb, they show you more of the types of content you’ve been ‘liking’/clicking, posted by the same (types of) people you’ve been interacting with.

Perhaps you can imagine the bias-confirming effects of this setup.

Let’s say you’re a politically liberal person who starts using Facebook in 2016. First of all, a majority of your friends and a majority of the politics-related pages you ‘like’ are already likely going to be liberal-leaning, as we naturally seek out like-minded people/sources of information. But maybe you’re an outlier and 40% of your friends are conservatives.

When you first start using Facebook, you’re seeing a good variety of viewpoints expressed on your newsfeed. However, you overwhelmingly ‘like’ and interact with the liberal viewpoints that coincide with your current worldview and make you feel more confident in your own rectitude and rightness.

Pretty soon, your conservative friends will have disappeared from your timeline. The politics-related posts that appear on your newsfeed will be unanimously liberal, and likely many of them will express (implicit) animosity toward conservative viewpoints. This will give you the impression that you’re just so damn Right and Smart and only a few far-away misguided fools disagree with you.

Social media is way too good at facilitating this. Via Black Iron Kisses

The point is that if you’re not careful and proactive about how you use social media, it’s preposterously easy to end up in an echo chamber in which you’re constantly receiving signals that your worldview is Righteous and Definitively True, and everyone who disagrees is a misinformed moron, or worse.[1]
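The feedback loop in the example above can be sketched as a toy simulation. To be clear, nobody outside these companies knows exactly how the real algorithms work, so everything here is an assumption made for illustration: the function name `simulate_feed`, the 10-post feed, the 40% conservative starting share, and the simple "boost what gets liked, decay what doesn't" rule.

```python
def simulate_feed(days=30, feed_size=10, conservative_share=0.4, learning_rate=0.2):
    """Toy model of an engagement-driven feed (illustrative assumptions only)."""
    # Starting weights mirror the friend list: 60% liberal, 40% conservative.
    weights = {"liberal": 1 - conservative_share, "conservative": conservative_share}
    conservative_posts_per_day = []

    for _ in range(days):
        total = weights["liberal"] + weights["conservative"]
        # The feed allocates slots in proportion to the current weights.
        shown_conservative = round(feed_size * weights["conservative"] / total)
        shown_liberal = feed_size - shown_conservative
        conservative_posts_per_day.append(shown_conservative)

        # The user 'likes' every liberal post and ignores the rest.
        # Liked content gets boosted; ignored content slowly decays.
        weights["liberal"] += learning_rate * shown_liberal
        weights["conservative"] *= 1 - learning_rate

    return conservative_posts_per_day


history = simulate_feed()
print(history[:5])
```

Under these made-up numbers, the conservative posts drop out of the feed within a handful of simulated days, even though 40% of the friend list never changed. The real systems are surely more complicated, but the basic dynamic is the same: engagement in, more of the same out.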

Facebook seems especially toxic in this regard, as its algorithm seems to update with extreme regularity in order to feed you exclusively the same opinions and information you’ve recently been responding to. Facebook almost certainly understands the filter-bubble-creating effect of its algorithm, but at the end of the day, its primary concern is how to feed people content that will keep them coming back to the website.

3. YouTube and other sites recommend content they think you’ll like, not alternate viewpoints

Maybe you’re starting to notice a pattern here…

It’s now become common practice on many websites to recommend additional content to info-consumers. Similar to newsfeed filtering algorithms, recommendation algorithms suggest more content based on what the consumer has already watched/read/responded favorably to.

The fatal flaw of recommendation algorithms is that they tend to do nothing but point to more content that supports/defends the ideas expressed in the content the user is already consuming. Sometimes this works out okay, if the user happens to be watching a YouTube lecture by Jordan Peterson, or some other thoughtful, balanced, rigorous, science-attentive thinker. Unfortunately the vast majority of people are not consuming this sort of content. Most people are consuming entertainment with proven-to-sell messaging; or agenda-driven, partisan infotainment; or silly conspiracy theories.

In these latter cases, YouTube’s recommendation algorithm will simply lead a subject further down whatever rabbit hole they’re already occupying, repeatedly confirming their preexisting world models and probably supplementing them with more partisan or spurious information.
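Here's a minimal sketch of how a similarity-based recommender produces this rabbit-hole effect. This is not YouTube's actual algorithm (which isn't public); it's a toy that ranks unwatched videos by tag overlap with what you've already watched. The catalog, the tags, and the function names are all hypothetical.

```python
def jaccard(a, b):
    """Overlap between two tag sets: size of intersection over size of union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Hypothetical catalog: video id -> set of topic tags.
CATALOG = {
    "moon_landing_hoax_1": {"conspiracy", "space"},
    "moon_landing_hoax_2": {"conspiracy", "space", "government"},
    "apollo_engineering_doc": {"space", "history", "engineering"},
    "flat_earth_proof": {"conspiracy", "geography"},
    "intro_to_statistics": {"math", "education"},
}


def recommend(watched, catalog=CATALOG, k=2):
    """Return the k unwatched videos most similar to the viewer's history."""
    # The viewer's profile is simply every tag they have watched so far.
    profile = set().union(*(catalog[v] for v in watched))
    candidates = [v for v in catalog if v not in watched]
    # Highest similarity first; ties broken alphabetically for determinism.
    candidates.sort(key=lambda v: (-jaccard(profile, catalog[v]), v))
    return candidates[:k]


print(recommend(["moon_landing_hoax_1"]))
```

After one moon-hoax video, the top two suggestions in this toy are another moon-hoax video and a flat-earth video. The engineering documentary that might actually challenge the viewer shares only one tag with their history, so it ranks lower, and nothing in the scoring rule rewards a dissenting perspective.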

An unfortunate soul who lacks the cognitive tools to distinguish between reputable information and fantasy may venture into YouTube to watch a video about a conspiracy theory their friend mentioned and eight hours later wind up in an utter epistemic wasteland, having filled their mind with numerous theories with little to no basis in reality. Some people already spend their lifetimes doing this, and YouTube makes it much easier to continuously convince yourself that your “truther” worldview is accurate (because these people made so many videos and they sound so sure!).

This is not to say that conspiracy theories are never true or never contain anything of value. It’s just to say that 98% of them are so obviously full of insanely unlikely or demonstrably false claims that your chances of moving closer to the truth by immersing yourself in them are next to nil.

4. Media outlets are biased, and you gravitate toward the ones that confirm your worldview

The vast majority of media outlets, if not all of them, have some kind of ideological leaning. This isn’t always a bad thing and might be unavoidable, considering it’s quite difficult to run a media company without favoring certain ways of seeing and interpreting the world.

At HighExistence, we strongly value science and evidence, but I’d be remiss if I claimed we haven’t historically been biased toward certain positions: pro-psychedelic drug use, pro-spirituality, pro-self-improvement, pro-counter culture, etc. HighExistence has probably tended to lean left politically and to be fairly hostile toward organized religion.

One of the things I have attempted and continue to attempt to do as editor-in-chief of HighExistence is to illuminate some of HE’s historical blind spots, to render the publication more objective and balanced. For instance, you should know that psychedelics should be treated with reverence and caution; spirituality can be an ego trap; self-improvement has a dark side; liberals and conservatives balance each other like the Yin and Yang; and culture is your sorta-trustworthy friend.

Media bias isn’t anything new, but with the rise of the Internet, it seems to be worse than ever. As I alluded to earlier, the democratization of information-publishing that arose with the Internet means that anyone can start a website and broadcast their opinions to whoever will listen. In many ways, this has been a beautiful thing.

Unfortunately, though, this phenomenon has also amplified the voices of millions of straight-up ideologues, crackpots, and conspiracy theorists. There’s now a whole lot more bullshit information that one needs to sift through in order to find the proverbial chocolate chip cookies.

Unless you’re really good at identifying credible sources and filtering out horse shit, it’s really easy to end up following a host of information outlets that consistently present rankly partisan or unverified/unverifiable information as if it is the Absolute Truth.

If you’re not careful, you can delude yourself into thinking you’re following objective sources, when in fact you’ve just unconsciously gravitated to the media outlets that favor your particular ideological leaning and consistently publish stories that validate your current worldview (thanks, confirmation bias!).

Perhaps you’re thinking, “Haha, yeah, well, I don’t have to worry about that because I only read reputable newspapers like the New York Times.”

In that case, you may want to consider the following, taken from this article by Michael Cieply, an ex-editor for the New York Times who worked there for nearly 12 years:

“It was a shock on arriving at the New York Times in 2004, as the paper’s movie editor, to realize that its editorial dynamic was essentially the reverse [of the LA Times]. By and large, talented reporters scrambled to match stories with what internally was often called “the narrative.” We were occasionally asked to map a narrative for our various beats a year in advance, square the plan with editors, then generate stories that fit the pre-designated line.”

Yep, that’s right. Even massive media outlets generally considered to be reputable are often disconcertingly biased. In the case of the Times, not only does the paper lean left, but according to Cieply, stories are actually selected and tailored to fit a preexisting narrative that the higher-ups at the Times wish to propagate.

Scary, right? It’s dangerous to go alone. Take this: one of the best lists I’ve found of the least biased media sources. The website on which this list is found is also a useful tool for quickly checking the reliability and political bias of a given news source.

5. The current model of Internet publishing encourages the creation of shitty, shallow clickbait content

Media bias would be bad enough, but the problem is intensified all the more by the janky-ass ad-driven profit model of online publishing.

Most websites make money through advertising, which results in an insidious numbers game: The more traffic you get, the more money you make.

Thus, online publishers are not incentivized to create the truest, most in-depth and high-quality content they can. Rather, they’re incentivized to create whatever makes people click and share — in other words, clickbait.
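To put rough numbers on that incentive, here's a back-of-the-envelope sketch. It assumes a CPM ("cost per mille") pricing model, where an advertiser pays a fixed amount per 1,000 pageviews. That's one common scheme, not the only one, and the pageview counts and the $5 rate below are invented purely for illustration.

```python
def ad_revenue(pageviews, cpm_usd):
    """Revenue when advertisers pay cpm_usd for every 1,000 pageviews."""
    return pageviews / 1000 * cpm_usd


# Hypothetical figures: same ad rate, very different traffic.
in_depth_essay = ad_revenue(pageviews=20_000, cpm_usd=5.0)
clickbait_listicle = ad_revenue(pageviews=500_000, cpm_usd=5.0)

print(f"In-depth essay:     ${in_depth_essay:,.2f}")
print(f"Clickbait listicle: ${clickbait_listicle:,.2f}")
```

Notice that nothing in the formula measures accuracy, depth, or effort. Under this model, the only lever a publisher can pull is traffic.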

The psychology of clickbait is quite interesting and kind of depressing.

As you might guess, for various reasons, we humans tend to click on listicles and headlines which create a “curiosity gap”—i.e. headlines which create a kind of cliffhanger, making us really want to know what happens next.

That’s not all, though. What’s really pernicious about clickbait is that it also works extremely well if it arouses our emotions and/or expresses an extreme sentiment, either positive or negative.

This situation encourages publishers—even supposedly high-quality journalistic outlets—to toss detached objectivity out the window and gravitate toward making constant extreme statements designed to trigger people emotionally.

And given how fickle and fragmented our collective attention has become due to information overload, the actual content of articles has become shorter, shallower, and dumbed-down. Much of it is merely brief commentary on some tweet or sound bite that is likely to rile up the intended audience and fuel our noxious online culture of outrage and mob justice.

This is especially true nowadays in politics, a domain in which most journalistic outlets have all but ceased discussing the truly important issues of our time in favor of treating politicians as reality TV stars and publishing rankly partisan gossip about whatever ephemeral scandal seems most likely to increase traffic. (Or they just, you know, invent a provocative fake news story and run with it.)

Donald Trump, ironically an actual reality TV star, is a modern clickbait “journalist’s” wet dream—a perpetually spurting fountain of scandalous tweets and sound bites that make for perfect clickbait.

But what are these substanceless clickbait articles actually doing? Are they helping anything? Or are they actively hurting things: making us dumber, distracting us from what really matters, and embroiling us in meaningless political theatrics? Is it a coincidence that Trump received vastly more free press coverage than any other political candidate, due to his seemingly tactical use of political incorrectness, and came out of nowhere to win the presidential race?

Let’s not forget that social media feeds and search engines show you what you want to see, meaning that during the last election most Democrats saw nothing but Trump-bashing clickbait that validated and amplified their distaste for Republicans, while most Republicans saw the precise opposite.

It was in Google and Facebook’s best interest to facilitate this, algorithmically. After all, targeted political clickbait confirming people’s worst suspicions about The Other Team drives clicks. It also drives outrage and hostility. A savvy observer might ask, “Hmmmm, wonder if this phenomenon has anything to do with the preposterous level of polarization between right and left that we’re currently observing in the United States?” More on that in a moment.

A perceptive observer might also note at this point that I got you to click and read this article using a fairly clickbait-y headline. Hahah! My master plan is complete! Nah, jk. In the current online environment, it has become almost impossible to abstain from clickbait conventions if you wish to actually have people read and share what you write. I don’t think clickbait headlines are inherently evil, so long as they’re driving traffic to something genuinely substantive and worthwhile (hopefully this article qualifies). I don’t even have a real problem with harmless silly clickbait leading to cat pics or whatever. The Internet should be fun.

What I do have a problem with is clickbait that is actively spreading misinformation for traffic, promoting dumbed-down black-and-white thinking, catalyzing intense and useless outrage, pandering to people’s darkest fears about The Other Team, and stoking the all-consuming political flame war that is tearing the US apart at the seams.

The Kicker: People aren’t wired to want the truth

In The Righteous Mind: Why Good People are Divided by Politics and Religion, Jonathan Haidt assembles an impressive body of evidence suggesting that human beings did not evolve to be rational truth-seekers.

Our rational minds are not scientists dispassionately seeking objective facts; rather, they are more like lawyers that devise post hoc arguments to justify what we intuitively desire or feel is right.

Haidt argues convincingly that people are driven much more by intuition than reason, and that we evolved the capacity to reason primarily in order to provide explanations to other people for our intuition-driven actions.

Haidt explains that when you ask people moral questions, time their responses, and scan their brains, their answers and brain activity patterns indicate that they reach conclusions rapidly and produce reasons later only to justify what they’ve already decided.

This is bad news for anyone clinging to a faint hope that the average person is likely to wade valiantly through the Internet’s vast swamplands of bullshit in order to find beliefs backed by evidence and sound arguments.

It turns out that we probably aren’t even wired to want the truth. Most of us just want to find the best reasons to think that what we already believe is correct. We filter out information that conflicts with our beliefs and cling desperately to information that validates us.

Oh look, it’s our old friend, confirmation bias! Confirmation bias is so strong that people often strengthen their prior beliefs in the face of contradictory evidence. This phenomenon is known as the backfire effect, and unfortunately, it renders most (online) debates useless.

Unless people are well-aware of these biases and/or extraordinarily committed to challenging and refining their own world models, presenting them with evidence which conflicts with their worldview will most likely make them cling even tighter to their prior beliefs.

Thus, the kicker: Not only is the Internet an ultra-effective Bias Confirmation Machine, but most people already want nothing more than to confirm their own biases.

The Gigantic Problem With This

As you can plainly see, this is a disastrous state of affairs for anyone who values truth and wishes for the general human population to move closer to it.

Instead of promoting good-faith truth-seeking, the Internet has in many ways become a machine which reinforces what people already believe and shuts out anything new or challenging.

Maybe you don’t think this is such a problem: “Eh, fuck truth. Who cares if people are updating their beliefs vs. becoming ever more entrenched in their way of thinking? Humans are dumb anyway; what could it hurt?”

Well, but see, there’s a bigger problem here. Even if you don’t really value truth, you probably value life and the continuation of our species.

And the thing is, inflexible, dogmatic beliefs have probably been the leading cause of murder throughout human history.

You know, there were all those (religious) wars and conflicts that basically boiled down to one group going, “Hey, those people over there believe something different from what we believe. What we believe is the Absolute Truth, so they’re clearly misguided heretics and the world would be better off without them. Let’s go fuck their shit up.”

Obviously I’m being kind of facetious, but in truth, a lot of killing has happened because “those other guys over there don’t believe what we believe.”

And don’t even get me started on the inflexible beliefs which undergirded the largest mass murders in history, all carried out in the 20th century. For Hitler and company it was, “Jews are a lesser race of humans and must be exterminated so we can purify our population.” For Stalin’s Russia and Mao’s China it was, “Communism is the Best Shit Ever; communism by any means necessary.”

Historians estimate that these three men—Hitler, Stalin, and Mao—were responsible for the deaths of approximately 80 MILLION people.

Let that number sink in for a moment. It’s almost incomprehensible.

“But we learned from those tragedies, didn’t we? Stuff like that can’t happen anymore, right?”

I wish that were true.

As I type this, ISIS and other similar groups hold certain inflexible beliefs such as, “Anyone who doesn’t believe in the One True God Allah is infected with sin and impurity. These people must be converted to belief in Allah or destroyed.” If they had their way, all evidence indicates that ISIS, which has roughly 30,000 to 200,000 members, would convert or murder the planet’s 6 billion non-Muslims.[2]

Imagine what these people would do if they got their hands on some nukes or nanotechnology or bioweapons or superintelligent AI—technologies which either exist now or are projected to exist in the not-too-distant future and which are considered among the greatest threats to the human species, threats which could bring about the extinction of human beings as well as most/all life on Earth.

I shudder to consider.

Maybe ISIS is too far-off an example for you. In that case, consider again the present political moment in the United States. People are at each other’s throats right now. Sadly, this isn’t mere metaphor. Politically motivated violence has erupted with disturbing frequency since the election of Donald Trump, on both sides of the political aisle (see here and here). We’re seeing an inferno of political tribalism unmatched in recent memory.

And guess what? Somehow, millions of people on both sides are absolutely convinced that their side possesses the Correct Interpretation of the World. Many are so convinced, in fact, that they’re willing to harm those who disagree with them. Sound familiar? Good ol’ humans, acting out the same toxic, played-out scripts for millennia on end.

But wait, how could this be? We live in the Internet Age—a time in which we’re just a click away from mountains of compelling evidence and arguments that can make us realize the incompleteness of our current worldview. In theory, yes. In practice, hell no, for all the reasons I listed above.

You can bet your ass that the Internet Bias Confirmation Machine has a lot to do with the unholy degree of political tension in the US right now. People are straight-up trapped in their filter bubbles and echo chambers (two slightly different concepts which for our purposes mean the same thing)—their algorithmically-crafted courtrooms in which juries of yes-men nod vigorously at their every verdict. They go on the Internet and all they see is a steady stream of signals telling them that their current beliefs are Right and Good, and The Other Team’s beliefs are Wrong and Evil. What a time to be alive.

A Super Short and Sweet Conclusion

At over 4,200 words, this has been a long-ass article. If you’re still with me, you fucking rule!

In sum, the Internet is a beautiful place, but it’s also broken in some pretty serious ways.

The current architecture of the web tends to result in most users encountering a lot of information which is either biased, inflammatory, spurious, shallow clickbait or which does nothing but validate their current worldview.

As I explained, this has shitty consequences. It fuels dangerous dogmatism and divisive tribalism while simultaneously rendering good-faith truth-seeking and intellectual humility increasingly rare.

Luckily, there are ways of combatting this set of phenomena. Simply by reading this article and becoming aware of these issues, you have come a long way toward transcending their harmful influence.

There are additional methods you can use to avoid becoming Just Another Fool on the Internet. These methods revolve around science, rationality, and skepticism, and I will discuss them in a follow-up to this article, so stay tuned.

As Nobel Prize-winning theoretical physicist Richard Feynman once said, “The first principle is that you must not fool yourself—and you are the easiest person to fool.” I sincerely hope you will take these words to heart and use the web in ways which make you smarter, not the opposite.

Here’s to co-creating a better online world and a more intelligent, cooperative, sustainable humanity. Peace.

Click here to read Part Two of this series!

Further Study: The Righteous Mind: Why Good People are Divided by Politics and Religion by Jonathan Haidt

The book I mentioned a couple times in this post — The Righteous Mind: Why Good People are Divided by Politics and Religion by Jonathan Haidt — is one of the best books I’ve ever read. It’s a book that will jar you out of your filter bubble. A book I truly wish everyone in the world would read. If everyone did, I think the world would become more peaceful and cooperative. If you’re so inclined, you can buy the book on Amazon or read the key insights for free on Blinkist.

Footnotes:

[1] Politics seems to be one of the domains in which people are most likely nowadays to wind up in an echo chamber. Use this knowledge wisely.

[2] Dogmatic beliefs aren’t the whole story of why ISIS and similar groups want to exterminate most humans. Rather, dogmatic beliefs tend to intertwine with extreme tribalism—a subject I wrote about at length here—to create the most dangerous groups in the world.

Jordan Bates

Jordan Bates is a lover of God, father, leadership coach, heart healer, writer, artist, and long-time co-creator of HighExistence. — www.jordanbates.life
